The situation

Last year, my old GeForce 1060 3GB video card started dying. It would randomly hang the X.org server and lock up the keyboard and mouse, leaving a very disheartening line in the systemd journal: “GPU has fallen off the bus”. Restarting the computer would get the card working again, sometimes for a few hours or even a few days, only for it to randomly “fall off” again.

I kept using that card for the best part of a year, alternating between an extremely old Geforce 750GT when I needed a stable computer and the faulty 1060 when I needed GPU power. Earlier this year, I gave up and bought an old Radeon RX 570 4G, and the experience with the open source AMD GPU driver was so much better than with the NVIDIA proprietary driver that I decided to go all in on team red.

I need a video card for just about everything: encoding capability to record and edit lectures in case of online teaching, training deep learning models with PyTorch or TensorFlow to teach Machine Learning courses and for my own pre-PhD research, and accelerated everyday desktop work on a dual-monitor setup with 4K and 2K displays. Gaming sits at the end of that list. A lot of people would normally point at an NVIDIA 3000 series card. But after almost two decades of using four NVIDIA cards, with all the ups and downs, I was in need of a change before I got bored to death.

The options

Because of those large monitors, combined with a tendency to forget to exit applications and the need for deep learning training, I need a lot of VRAM. NVIDIA weirdly equipped their RTX 3070 and 3070 Ti with just 8GiB of VRAM, which pushed the older 3060 12GiB and the Radeon 6700XT 12GiB ahead of the other GPU choices for me. In the month of the purchase, tandoanh.vn seemed to have the best GPU prices in my neighborhood.

The 3070 Ti was going for around 20 million VND with only 8GB of VRAM.

The old 3070 (no Ti suffix) was going for around 16.4 million VND.

The old non-Ti 3070 looked very tempting, but it was going for about 2 million dong more than the 6700XT with only 8GiB of VRAM, so I looked at cheaper options instead. The strongest contender from team green for me was the old non-Ti GeForce 3060 with 12G of VRAM.

The 3060's price was noticeably lower than that of my eventual choice, the 6700XT, which was going for 14.590.000 VND. The VRAM is the same, and the brand power of NVIDIA in the GPGPU world may come back to haunt me with a lot of buyer's remorse. Still, I was skeptical of the LHR restriction in the NVIDIA driver, and NVIDIA's years of neglect toward the Linux software stack discouraged me this time. So my choice was driven not only by months of reading reviews and number crunching, but also by the dopamine of being different and the ecstasy of trying something new.

I decided to go for the MSI Radeon™ RX 6700XT MECH 2X 12G OC – 12GB GDDR6

The Card itself

The card is bigger than the twin-fan cooler design makes it look. It stands much taller than the PCI expansion slot bracket, it is about three slots thick, and it's quite long too.

One thing I missed in the product images is that the card has only 3 heat pipes. However, the heatsink seems very heavy and adequate.

The two massive fans only spin when they are needed and then go back to idle.

One of the small PCI slots is completely covered by the heatsink, which makes this card essentially a 3-slot occupant.

The driver

System monitoring

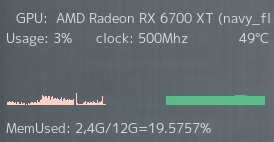

Using an AMD card with Linux nowadays is just plug and play. The driver is already in the kernel, and I get 4K resolution even in the Plymouth theme. Information about the card's usage can be gathered from the text files under /sys/class/drm/card0, so I wrote a small conky config to watch the card's utilization, clock speed, and memory utilization.

${GOTO 20}GPU: ${exec glxinfo| grep "renderer string" | cut -d: -f2 | cut -c1-31}

${GOTO 10}Usage: ${exec cat /sys/class/drm/card0/device/gpu_busy_percent}% ${GOTO 100}clock: ${exec grep -Po '\d+:\s\K(\w+)(?=.*\*$)' /sys/class/drm/card0/device/pp_dpm_sclk} ${alignr}${exec echo "$((`cat /sys/class/drm/card0/device/hwmon/hwmon*/temp1_input ` / 1000))" }°C

${GOTO 10}${execgraph "cat /sys/class/drm/card0/device/gpu_busy_percent" 50,100 E9897E E9897E} $alignr ${execgraph "echo $(cat /sys/class/drm/card0/device/mem_info_vram_used /sys/class/drm/card0/device/mem_info_vram_total) | awk '{printf $1/$2*100}' " 50,100 1B7340 1B7340}

${GOTO 10}MemUsed: ${exec numfmt --to=iec < /sys/class/drm/card0/device/mem_info_vram_used}/${exec numfmt --to=iec < /sys/class/drm/card0/device/mem_info_vram_total}=${exec echo $(cat /sys/class/drm/card0/device/mem_info_vram_used /sys/class/drm/card0/device/mem_info_vram_total) | awk '{printf $1/$2*100}'}

That renders into a serviceable block, not pretty but informative.
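The same sysfs files can also be read from a script instead of conky. Here is a minimal Python sketch of that idea, assuming the card shows up as card0 and exposes the same files the conky config reads. The function name read_gpu_stats and the dictionary keys are made up for illustration and are not part of any library.

```python
from pathlib import Path
import re


def read_gpu_stats(device_dir="/sys/class/drm/card0/device"):
    """Collect the values shown in the conky config from the sysfs text files."""
    dev = Path(device_dir)

    def read_text(rel):
        return (dev / rel).read_text().strip()

    used = int(read_text("mem_info_vram_used"))
    total = int(read_text("mem_info_vram_total"))

    # pp_dpm_sclk lists one clock level per line; the active one ends with "*".
    sclk = None
    for line in read_text("pp_dpm_sclk").splitlines():
        match = re.match(r"\d+:\s*(\w+).*\*\s*$", line)
        if match:
            sclk = match.group(1)

    # temp1_input is in millidegrees Celsius; use the first hwmon directory found.
    temp_files = sorted(dev.glob("hwmon/hwmon*/temp1_input"))
    temp_c = int(temp_files[0].read_text()) // 1000 if temp_files else None

    return {
        "busy_percent": int(read_text("gpu_busy_percent")),
        "sclk": sclk,
        "temp_c": temp_c,
        "vram_used_bytes": used,
        "vram_total_bytes": total,
        "vram_percent": round(used / total * 100, 1),
    }
```

Calling read_gpu_stats() with no argument reads the real card. Passing another directory makes it easy to point at card1 on a machine with more than one GPU.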

ROCm – Radeon Open Compute

This is AMD’s equivalent of NVIDIA CUDA. It’s famous for all the wrong reasons, and my card is too new to be officially supported. Only Navi 21 cards (6900XT and 6800XT), code-named gfx1030, are officially supported. Running /opt/rocm/bin/clinfo on my card yields:

Platform ID: 0x7fb1a23f14b0

Name: gfx1031

Vendor: Advanced Micro Devices, Inc.

Device OpenCL C version: OpenCL C 2.0

Driver version: 3423.0 (HSA1.1,LC)

Profile: FULL_PROFILE

Version: OpenCL 2.0

Extensions: cl_khr_fp64 cl_khr

Searching for the error in the ROCm GitHub repository leads to a closed issue (https://github.com/RadeonOpenCompute/ROCm/issues/1668) repeating the fact that this card is not officially supported and telling users to try their luck compiling the ROCm software stack from source with the variable AMDGPU_TARGETS=gfx1031.

Even though there is an Arch Linux AUR package to compile ROCm from source, I want to use ROCm inside Docker containers so that all those deep learning Python packages won’t pile up in my root directory structure. This complicated things quite a bit: compiling ROCm from source took more than half an hour and a whopping 20GiB of RAM to complete. My computer can do that, but it’s not a process I would want to repeat over and over while I build and debug a Docker container.

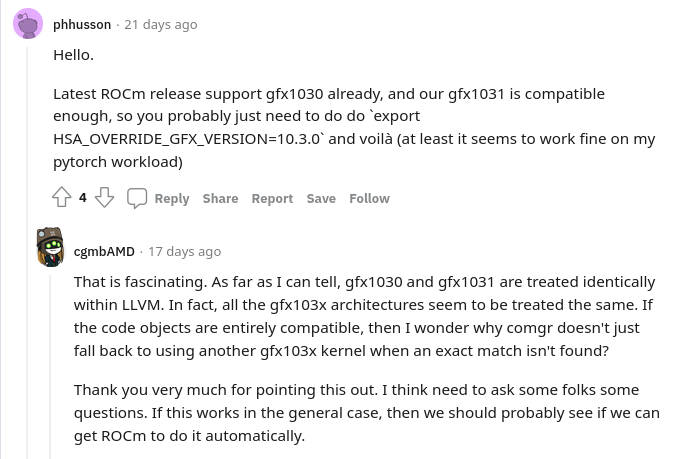

Luckily, after 1 week of searching, I stumbled upon this reddit post:

One user in that thread pointed out the simple fact that I can use the official build of ROCm, force it to skip the card detection process, and use the official binaries for the gfx1030 cards. Those binaries are similar enough to work on my card, and the entire process is just setting one environment variable:

export HSA_OVERRIDE_GFX_VERSION=10.3.0

With this magic environment variable, I was able to get ROCm working with both TensorFlow and PyTorch. The benchmark of ROCm on the 6700XT and the installation of AMD’s Advanced Media Framework to activate the card’s video encoder will be a tale for another time.
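A quick way to check that the override took effect is to ask PyTorch whether it can see the card. The following is only a sketch under a few assumptions: a ROCm build of PyTorch is installed, and the helper names apply_gfx_override and describe_gpu are made up for illustration. The variable is set before torch is imported so that it is in place before the ROCm runtime starts.

```python
import os


def apply_gfx_override(env=None, version="10.3.0"):
    """Set HSA_OVERRIDE_GFX_VERSION unless a value has already been chosen."""
    env = os.environ if env is None else env
    env.setdefault("HSA_OVERRIDE_GFX_VERSION", version)
    return env["HSA_OVERRIDE_GFX_VERSION"]


def describe_gpu():
    """Return the device name PyTorch reports, or None when no GPU is usable."""
    try:
        import torch  # assumption: a ROCm build of PyTorch is installed
    except ImportError:
        return None
    # ROCm builds of PyTorch expose the GPU through the torch.cuda namespace.
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_name(0)
```

Calling apply_gfx_override() and then print(describe_gpu()) should print the card's name when the override works, and None when PyTorch is missing or cannot use the GPU.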